The idea of machines being able to see and act for us is not a new one. It’s been the stuff of science fiction for decades, and is now very much a reality.

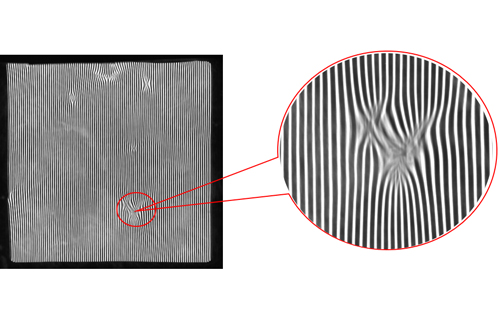

Machine vision came first. This engineering-based system uses existing technologies to mechanically ‘see’ steps along a production line. It helps manufacturers detect flaws in their products before they are packaged, for instance, or helps food distribution companies check that their foods are correctly labelled.

Since the development of computer vision, machine vision too is leaping into the future. If we think of machine vision as the body of a system, computer vision is the retina, optic nerve, brain and central nervous system. A machine vision system uses a camera to capture an image. Computer vision algorithms then process and interpret that image, before instructing other components in the system to act on the result.
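The capture, interpret and act sequence described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular machine vision product works: the camera is replaced by a hard-coded grayscale grid, ‘interpretation’ is reduced to counting dark pixels, and the names `capture_frame`, `count_dark_pixels`, `decide_action` and `inspect`, along with the threshold values, are hypothetical.

```python
from typing import List

# A frame is a grid of grayscale pixels, 0 (black) to 255 (white).
Frame = List[List[int]]


def capture_frame() -> Frame:
    """Hypothetical stand-in for a camera: a bright 4x4 frame with one dark blemish."""
    frame = [[200] * 4 for _ in range(4)]
    frame[1][2] = 30  # simulated flaw on the product surface
    return frame


def count_dark_pixels(frame: Frame, threshold: int = 80) -> int:
    """Interpretation step: count pixels darker than the (assumed) threshold."""
    return sum(1 for row in frame for pixel in row if pixel < threshold)


def decide_action(dark_pixels: int, max_allowed: int = 0) -> str:
    """Action step: tell the rest of the system what to do with the item."""
    return "reject" if dark_pixels > max_allowed else "accept"


def inspect(frame: Frame) -> str:
    """Run the interpret and act steps on one captured frame."""
    return decide_action(count_dark_pixels(frame))


if __name__ == "__main__":
    print(inspect(capture_frame()))
```

In a real system the first function would read from a camera or sensor and the last would drive something physical, such as a diverter arm on a production line. The interpretation step in the middle is where computer vision does its work.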

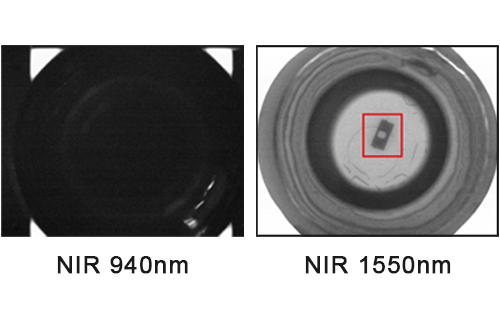

Computer vision can be used alone, without needing to be part of a larger machine system. But a machine vision system doesn’t work without a computer and specific software at its core. This goes way beyond image processing. In computer vision (CV) terms, an image doesn’t even have to be a photo or a video; it could be an ‘image’ from a thermal or infrared sensor, a motion detector or another source.

Increasingly, computer vision is able to process 3D and moving images as well, including unpredictable observations that earlier iterations of such technology could not handle. Complex operations detect all sorts of features within an image, analyse them and provide rich information about those images.

As computer vision advances, the potential applications for machine vision multiply. What was once the preserve of heavy industry, used to trigger simple binary actions, now appears in the braking systems of autonomous vehicles, compares our faces with our passport photos at airport security gates and helps robots perform surgery.